Building a Secure, Scalable GenAI Assistant

Revolutionizing federal benefits with GenAI

Challenge

A major federal benefits program faced a challenge familiar across large software factories: slow, fragmented access to information. Its developers and product teams needed a faster, more intelligent way to access documentation, code examples, knowledge bases, and AI agent capabilities within a secure cloud environment. Existing chatbot tools were outdated. Although adequate for retrieving text, they could not support workflows, orchestrate agents, or integrate with an expanding GenAI ecosystem. “We didn’t want just another chatbot,” says Robert Ha, the technical lead on the project. “We needed something that could actually help developers work, such as automation, orchestration, and true integration with internal tools.”

Information lived across multiple repositories and locations, including wikis, documentation portals, code notebooks, and tenant-specific resources. Developers spent valuable time searching across systems or repeating the same questions in support channels. The organization needed a modern, scalable AI assistant that could streamline productivity and model the right way to safely operationalize GenAI inside a high-security cloud environment.

Solution

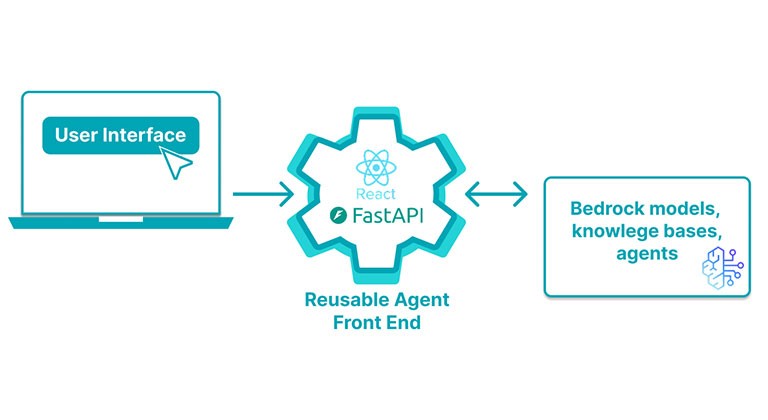

To meet this need, the Booz Allen team designed and built a next-generation GenAI assistant: an AI-powered, multi-tenant platform built with Amazon Bedrock on Amazon Web Services (AWS), FastAPI, React micro-frontends, and Strands for emerging agent orchestration. The solution integrates retrieval-augmented generation (RAG) search, a unified conversational interface, and automated agent workflows into a reusable, extensible framework. “This system is the exemplar,” says Jared Ross, a lead developer of the platform. “It shows teams exactly how to use Bedrock LLMs in a secure environment without the overhead of hosting models themselves.”
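To make the RAG flow concrete, here is a minimal, self-contained sketch of tenant-scoped retrieval followed by prompt assembly. It is an illustration only, not the platform's code: the `Doc` type, the tenant names, the sample documents, and the bag-of-words similarity are all assumptions standing in for a real embedding pipeline and a managed knowledge base.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    tenant: str  # hypothetical tenant identifier
    source: str  # hypothetical origin, e.g. a wiki page or notebook path
    text: str


def _vectorize(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real pipeline would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[Doc], tenant: str, k: int = 2) -> list[Doc]:
    """Return up to k of this tenant's documents, ranked by similarity to the query."""
    q = _vectorize(query)
    # Filter by tenant first so one tenant's content never reaches another's prompt.
    scored = [(_cosine(q, _vectorize(d.text)), d) for d in docs if d.tenant == tenant]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[Doc]) -> str:
    """Assemble the grounded prompt that would be sent to the LLM."""
    blocks = "\n".join(f"[{d.source}] {d.text}" for d in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{blocks}\n\nQuestion: {query}"
    )


# Made-up sample corpus for illustration.
DOCS = [
    Doc("tenant-a", "wiki/deploy.md", "Deploy the service with the pipeline deploy job."),
    Doc("tenant-a", "wiki/logging.md", "Logs are shipped to the central logging stack."),
    Doc("tenant-b", "wiki/deploy.md", "Tenant B deploys manually from the release branch."),
]

hits = retrieve("How do I deploy the service?", DOCS, tenant="tenant-a")
prompt = build_prompt("How do I deploy the service?", hits)
```

In a deployment like the one described, the retrieval step would presumably be delegated to a managed knowledge base and the prompt passed to a hosted model; the tenant filter applied before ranking is the part of the sketch most relevant to a multi-tenant design.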

The architecture supports persistent conversation history, ties directly into documentation pipelines, and includes a plug-and-play micro-frontend that tenants can adapt for their own AI-enabled experiences. Designed for long-term flexibility, the assistant can evolve as Bedrock introduces more advanced agent capabilities and orchestration patterns. As Ha notes, “We want this to be the GenAI playground for the entire ecosystem where teams can interact with their agents and knowledge bases through natural language.”
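As a rough illustration of persistent conversation history in a multi-tenant setting, the sketch below keys each conversation by a (tenant, session) pair and returns a bounded window of recent messages. It is a hypothetical in-memory stand-in: the `ConversationStore` class, its method names, and the `max_turns` limit are assumptions, and a production system would back this with a durable store (the tech stack lists DynamoDB plus custom session logic).

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str
    ts: float = field(default_factory=time.time)


class ConversationStore:
    """In-memory stand-in for a persistent table keyed by (tenant_id, session_id)."""

    def __init__(self, max_turns: int = 20) -> None:
        self._items: dict[tuple[str, str], list[Message]] = defaultdict(list)
        self._max_turns = max_turns

    def append(self, tenant_id: str, session_id: str, role: str, content: str) -> None:
        # The composite key keeps each tenant's sessions isolated from the others.
        self._items[(tenant_id, session_id)].append(Message(role, content))

    def history(self, tenant_id: str, session_id: str) -> list[dict]:
        """Return the most recent messages, oldest first, as role/content dicts."""
        msgs = self._items.get((tenant_id, session_id), [])
        return [{"role": m.role, "content": m.content} for m in msgs[-self._max_turns:]]


store = ConversationStore(max_turns=2)
store.append("tenant-a", "s1", "user", "Where are the deploy docs?")
store.append("tenant-a", "s1", "assistant", "See the deployment wiki page.")
store.append("tenant-a", "s1", "user", "And the logging docs?")

recent = store.history("tenant-a", "s1")      # only the last two messages
isolated = store.history("tenant-b", "s1")    # another tenant sees nothing
```

Capping the returned window is one simple way to keep prompts within a model's context limit; summarizing older turns is a common alternative that this sketch does not attempt.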

Impact

The GenAI assistant is already transforming how teams access information and collaborate on the platform. Early usage shows a meaningful reduction in support channel traffic as users transition from manual searches to automated, contextual responses through the assistant’s RAG capabilities. “Instead of digging through repos or pinging someone, you can just ask the assistant,” Ross says.

The platform’s ability to ingest code examples from notebooks and offer AI-driven coding guidance has further accelerated development workflows. In the near future, the assistant will also serve as the main interface for tenant-built AI agents, giving teams a unified space to test and interact with their GenAI capabilities without needing console access or custom tooling. “The backend tech is complex, but the experience is simple,” says Ha. “That’s what gets people to actually use it.”

By creating a standardized, secure, and scalable path for integrating AI into a large federal benefits ecosystem, the agency has established a foundation for AI-enhanced development that reduces cognitive load, improves engineering efficiency, and accelerates mission delivery across one of the government’s most complex platforms.

Summary

- Reduced support channel traffic as users shift from manual questions to AI-driven Retrieval-Augmented Generation (RAG) search

- Faster developer onboarding through centralized access to documentation, code examples, and notebooks

- Improved developer productivity with AI-assisted code guidance and embedded notebook search

- Decreased time spent searching for information across Confluence, GitHub Wikis, and repos

- Lower cognitive load for engineering teams through automated retrieval and streamlined workflows

- Increased reuse and standardization with a multi-tenant, plug-and-play framework

- Accelerated adoption of GenAI across the platform via a consistent, secure interface for interacting with Large Language Models (LLMs) and agents

Tech Stack

Cloud + AI Platform

- Amazon Bedrock (LLMs, Knowledge Bases, Agent Frameworks)

- Anthropic Claude models

- Emerging Bedrock Agents and Strands orchestration

Application Architecture

- FastAPI backend

- React micro-frontends

- Multi-tenant chat interface

- Persistent conversation management (DynamoDB + custom session logic)

AI + Knowledge Integration

- RAG-enabled search

- Embedding pipelines

- Document ingestion (GitHub Wikis, Confluence, Jupyter Notebooks, etc.)

Automation + Engineering

- CI/CD pipelines for updates and retraining

- Modular component framework for tenant reuse

- Infrastructure and orchestration aligned to GovCloud constraints